Eyes on Anthropic as IT Prioritizes Interoperable MCPs

ETR Insights presents a moderated group discussion with senior technology executives on how the emerging Model Context Protocol (MCP) gives disparate AI agents a common language for working with internal systems and third-party software. Our panelists say that MCP standardization significantly reduces the effort required to scale pilots into production and speeds up prototyping, so new workflows reach users faster. MCP lets agents invoke external tools on live data and lets each use case be paired with the most economical model, giving companies leverage over hyperscalers and SaaS vendors alike. Panelists also stress the importance of applying their own credential management, logging, and other guardrails to protect the sensitive enterprise data these operations touch. Read on to learn more about standardizing token economics, a single-pane-of-glass view of agent operations, and on-premises MCP deployments for highly regulated workloads.

Vendors Mentioned: Amazon (AWS) / Anthropic / Docker / Google (Gemini, GCP) / IBM (Watson) / Meta (Llama) / Microsoft (Azure) / Mistral AI / OpenAI (ChatGPT) / Palantir / Salesforce / ServiceNow

Key Takeaways

- Agnostic and Vendor-Neutral. Enterprises value MCP’s open and vendor-neutral approach, appreciating Anthropic’s independence from hyperscalers, which helps mitigate vendor lock-in risks. Anthropic’s focus on AI safety and constitutional AI further positions MCP as a trusted standardization effort.

- Interoperability Enabler. Panelists see MCP as essential for establishing interoperability among various AI agents, foundation models, and cloud providers. Enterprises are attracted to MCP’s promise of creating a universal, standardized framework for agentic AI deployment, reducing fragmentation and complexity.

- Efficiency Driver. MCP significantly reduces custom integration costs, streamlines development, and accelerates time-to-market for AI applications. Development teams benefit from quicker prototyping, fewer integration errors, and the ability to connect applications seamlessly without extensive coding.

- Security Clarification. MCP does not provide security on its own, but it gives organizations a consistent layer around which to build internal governance and security controls. Enterprises must still implement those controls rigorously themselves. MCP also prompts teams to ask critical security and governance questions, especially in compliance-heavy environments.

- Data Democratization. MCP simplifies leveraging previously siloed enterprise data by facilitating direct AI-agent communication with vendor SaaS platforms, eliminating costly data duplication and transfer processes. Real-time, accurate data accessibility significantly enhances the value of enterprise AI applications, allowing businesses to respond more rapidly and effectively.

“MCP is Fundamentally a Bridge between AI Models and [Other Enterprise] Technologies”

Model Context Protocol (MCP) is an open standard that lets developers connect large language models to varied data sources and software tools through a single, uniform interface.
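
To make the "uniform interface" concrete: MCP messages are JSON-RPC 2.0, and a client typically discovers a server's tools with `tools/list` and invokes one with `tools/call`. The sketch below, using only the Python standard library, shows the shape of those two requests. The tool name `search_contracts` and its arguments are hypothetical, and a real session would first complete MCP's initialization handshake over a transport such as stdio or HTTP, which is omitted here.

```python
import json
from itertools import count
from typing import Any, Dict, Optional

# JSON-RPC request ids must be unique within a session; a counter is the simplest source.
_next_id = count(1)

def make_request(method: str, params: Optional[Dict[str, Any]] = None) -> str:
    """Serialize a JSON-RPC 2.0 request of the kind an MCP client sends to a server."""
    message: Dict[str, Any] = {"jsonrpc": "2.0", "id": next(_next_id), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)

# Step 1: ask the server which tools it exposes.
list_request = make_request("tools/list")

# Step 2: invoke one tool by name. "search_contracts" and its arguments are hypothetical.
call_request = make_request(
    "tools/call",
    {"name": "search_contracts", "arguments": {"query": "termination clause", "limit": 5}},
)

print(list_request)
print(call_request)
```

Because every server answers the same two methods, an agent written against this shape can, in principle, reach any MCP-enabled system without a bespoke integration.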

MCP’s Emerging Role: a Vendor-Neutral Bridge. Anthropic’s fledgling MCP is gaining traction inside at least one global bank, on the promise of model-agnostic “plumbing” that gives AI agents a common language for talking to internal systems and third-party software. Panelists view Anthropic as uniquely positioned because it is independent of the hyperscalers, which reduces vendor lock-in risk. They also praise its commitment to AI safety and governance principles, which further builds trust. “It’s a framework for the situation we find ourselves in,” says one executive, “which is actually hedging the bets, working with multiple providers, and scaling some use cases and pilots.”

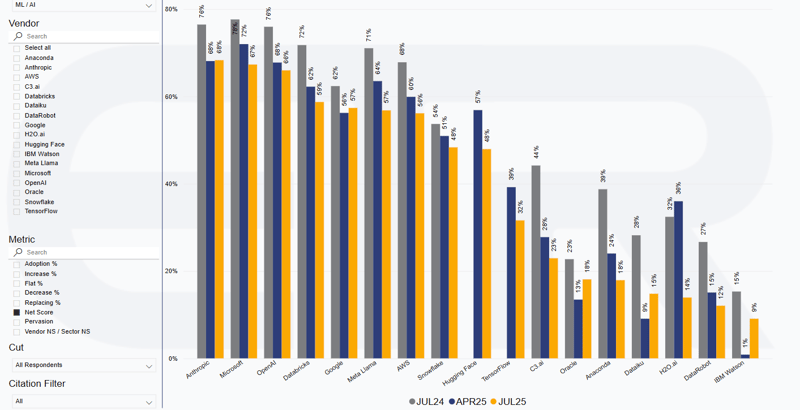

ETR Data: Overtaking Microsoft by 1ppt, Anthropic finds itself leading the ML/AI sector in Net Score, according to preliminary JUL2025 Technology Spending Intentions Survey (TSIS) data. The ML/AI sector still sees the highest average Net Score across all sectors in the survey, but is not impervious to the macroeconomic context, contracting by a relative 16%, or 10ppts, since last year.

Another panelist, who oversees enterprise architecture, says that MCP is “fundamentally a bridge between AI models and different technology, infrastructure, and services,” offering a standardized conduit for data exchange and, importantly, minimizing vendor lock-in (though most panelists still imagine hosting MCP servers in the cloud). “I would imagine a lot of other large enterprises are going to leverage their hyperscalers, similar to what we have done, in building those protocols that support the containers where you’re actually building these agentic solutions.” A third wants to offer customers a single point of entry. “Instead of APIs, how can we leverage this MCP and provide a standardized way, so the AI agents on the other side (our paying customers) can come in and access our data?” Moreover, as that company’s vendors adopt the MCP standard, its own AI agents can tap third-party tools more easily, supercharging its internal ChatGPT-style services. Because OpenAI, once dominant in foundation models, remains popular inside the enterprise, the company integrated MCP to simplify and accelerate deployment of its internal generative AI applications. “It’s making our life easier, a way for us to now consistently be able to access and leverage their capabilities and their tools.”
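
The third panelist's idea of one standardized entry point for customers' agents can be sketched from the server side. The example below is a minimal, hand-rolled dispatcher that loosely follows the MCP specification's conventions: `tools/list` returns each tool's name, description, and JSON Schema input; `tools/call` returns `content` plus an `isError` flag; and protocol-level problems come back as JSON-RPC errors. The tool `get_order_status` is hypothetical, and the sketch skips initialization, transports, and authentication; in practice teams would likely build on an official MCP SDK rather than write this plumbing themselves.

```python
import json
from typing import Any, Dict

# Hypothetical tool; a real server would query an internal order system here.
def get_order_status(order_id: str) -> str:
    return f"Order {order_id}: shipped"

# Tool registry: name -> metadata advertised to clients plus the Python handler.
TOOLS: Dict[str, Dict[str, Any]] = {
    "get_order_status": {
        "description": "Look up the status of a customer order.",
        "inputSchema": {
            "type": "object",
            "properties": {"order_id": {"type": "string"}},
            "required": ["order_id"],
        },
        "handler": get_order_status,
    }
}

def _error(req_id: Any, code: int, message: str) -> str:
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "error": {"code": code, "message": message}})

def handle(raw: str) -> str:
    """Dispatch one JSON-RPC 2.0 request to an MCP-style tools method."""
    req = json.loads(raw)
    req_id, method, params = req.get("id"), req.get("method"), req.get("params", {})
    if method == "tools/list":
        # Advertise metadata only; the handler itself is never sent to clients.
        tools = [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]
        result: Dict[str, Any] = {"tools": tools}
    elif method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            # An unknown tool is a protocol error (-32602, "Invalid params").
            return _error(req_id, -32602, f"Unknown tool: {params.get('name')}")
        try:
            text = tool["handler"](**params.get("arguments", {}))
            result = {"content": [{"type": "text", "text": text}], "isError": False}
        except Exception as exc:
            # Failures inside the tool are returned as results so the model can react.
            result = {"content": [{"type": "text", "text": str(exc)}], "isError": True}
    else:
        # -32601 is the standard JSON-RPC "Method not found" code.
        return _error(req_id, -32601, "Method not found")
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "result": result})
```

Exposing data this way means a customer's agent learns what is available from `tools/list` rather than from per-customer API documentation.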

Always a priority on any ETR panel: data security. While MCP standardizes interactions among AI agents via session controls and read-only modes, enterprises must engineer their own guardrails, such as limiting storage access, logging activity, locking servers in Docker containers, and managing credentials. “Let’s not overstate [MCP’s] purpose or mandate,” says one panelist. “You have to be mindful as an enterprise of those concerns and basically wire them into your MCP implementation.” “Security has to be designed, architected, and built in internally,” agrees another. “A lot of standard security protocols that we use, we’re sort of applying it here, before we expose this stuff out externally.” For one company processing hundreds of sensitive legal contracts, “the MCP server is something that we’ve deployed locally so that it doesn’t leave our premises. Deployment within contained ecosystems is an opportunity area for some of these functions, or some of these verticals, which have a significant footprint of a compliance and regulatory ecosystem.”
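
The guardrails panelists describe (restricting what agents can touch, logging activity, and keeping credentials out of the model's reach) typically live in the server code around each tool call. Below is a minimal sketch, assuming a hypothetical read-only allowlist and a hypothetical `CONTRACTS_API_TOKEN` environment variable; a production deployment would add real authentication, a secrets manager, and container isolation such as the Docker approach mentioned above.

```python
import logging
import os
from typing import Any, Callable, Dict

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
audit = logging.getLogger("mcp.audit")

# Hypothetical policy: only these tools may run, and all of them are read-only.
READ_ONLY_TOOLS = {"search_contracts", "get_contract"}

def guarded_call(tool_name: str, arguments: Dict[str, Any], caller: str,
                 handler: Callable[..., Any]) -> Any:
    """Enforce an allowlist, write an audit log entry, and inject credentials server-side."""
    if tool_name not in READ_ONLY_TOOLS:
        audit.warning("DENY caller=%s tool=%s", caller, tool_name)
        raise PermissionError(f"tool {tool_name!r} is not permitted")
    # Log argument names, not values, so sensitive contract text stays out of the logs.
    audit.info("ALLOW caller=%s tool=%s args=%s", caller, tool_name, sorted(arguments))
    # The secret comes from the server's environment; the model never sees or supplies it.
    token = os.environ.get("CONTRACTS_API_TOKEN")  # hypothetical variable name
    if token is None:
        raise RuntimeError("CONTRACTS_API_TOKEN is not set")
    return handler(token=token, **arguments)
```

The design choice here is that the agent only ever names a tool and its arguments; which tools exist, who called them, and which credentials they use are decided and recorded by the enterprise.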

Reducing Complexity and Costs with Standardization. Panelists experimenting with MCP are converging on a common goal: AI systems that are modular, model-agnostic, and easy to scale. They are taking different paths to get there. One company is already standardizing its token-economics and data-flow layers, another is leaning on vendor-supplied MCP servers to open up private datasets, and a third is still testing the protocol locally before moving to the cloud. Several say MCP fits the “plug-and-play” design rules they had already begun to draft. “When we started working on agentic AI use cases, and prior to that, generative AI use cases as well, a lot of the focus is on: what are these design principles that we want to establish as foundational building blocks for architecture and design that we need to adhere to? Some of these design principles include aspects like having an agent or model-agnostic architecture.” One executive sees the technology reducing the development labor behind standalone AI agents. “We were looking at scenarios where we have already developed and deployed internal ChatGPT-type solutions, and we were creating AI agents to go talk to these separate systems out there. It was becoming very labor-intensive, as well as difficult, and did not have consistency across different applications.”

“[MCP] gives us the ability to drive that standardization,” says one panelist, “not just with our model providers and our infrastructure providers, but potentially with the systems integrators and other service providers that we utilize within our entire ecosystem, to have a single pane of glass view on how we think through the various aspects of long-term, scalable, agentic AI model and application development.” At these companies, MCP connectors generally slot into existing DevOps pipelines, handled by current engineering staff. “I wouldn’t have exact FTE numbers required, but again, it doesn’t require a massive manpower-type of uptick, if an organization wants to start rolling and preparing infrastructure for that.”

Our guests suggest that growing volumes of cloud-hosted data may render local analysis impractical, and that a standardized protocol like MCP would keep costs in check vis-à-vis SaaS services. “The cost was tremendous, to develop all these capabilities where we want to run AI and some data analytics on this data that’s out there, but we have to go to [the SaaS providers], come back to our data, and restore this data. Everything that they have stored in the cloud, now we’re bringing it down and running AI on it.”

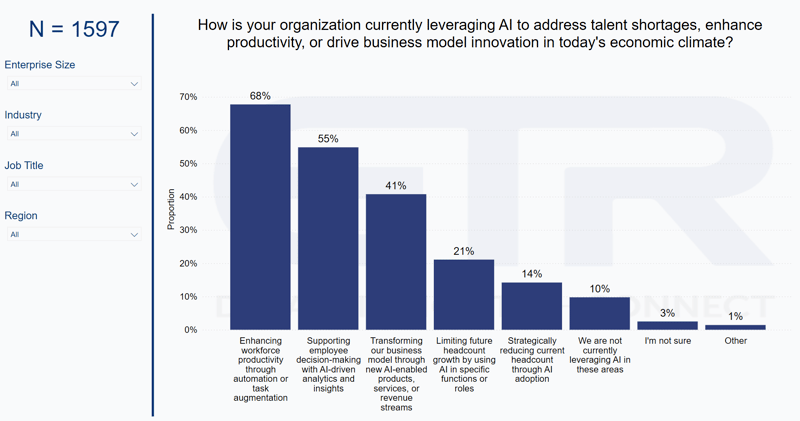

ETR Data: In preliminary Macro Views data, over two-thirds (68%) of IT decision makers say that they are enhancing workforce productivity through automation or task automation, while over half (55%) say they are supporting employee decision-making with AI-driven analytics and insights. MCP is another tool in the growing arsenal of ML/AI products, applications, and support infrastructure that enterprises need to reach these aims and support these environments.

Driving Organizational Change. Executives hope to pair each use case with the most cost-effective model, whether a leading proprietary model for text, a smaller open-source model for quantitative work, or a self-hosted option that keeps sensitive data in-house. “We aren’t at a point in the evolution of foundation models alone where a single model ecosystem exists anymore,” says one panelist. “OpenAI obviously had that for a brief point in time in 2023, and then obviously expanded it to Anthropic, Gemini, and everything else that you have, both within the proprietary model space, as well as all of the self-hosted models on your own cloud infrastructure, like Llama, Mistral and many others.” Panelists also hope to sidestep legacy “walled gardens” and fend off price hikes by using multiple models, ideally without having to rebuild pipelines or duplicate data. “It’s not like we’re not cost-sensitive, but the main rationale now for us was actually not to be in a situation where someone would actually have too much leverage and undue influence.”
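
Because MCP keeps the tool interface the same regardless of which model sits behind the agent, choosing a model per use case can become a simple routing decision. The sketch below picks the cheapest model approved for a task and forces a self-hosted option when the data is sensitive. The model labels and per-token prices are placeholders for illustration, not real products or quotes.

```python
from dataclasses import dataclass
from typing import FrozenSet, Sequence

@dataclass(frozen=True)
class ModelOption:
    name: str                       # hypothetical label, not a real product
    cost_per_1k_tokens: float       # placeholder price, for illustration only
    approved_tasks: FrozenSet[str]  # task types the team has validated this model for
    self_hosted: bool               # True if prompts and data never leave company infrastructure

CATALOG: Sequence[ModelOption] = (
    ModelOption("proprietary-frontier", 0.010, frozenset({"text", "reasoning"}), False),
    ModelOption("open-weights-small", 0.001, frozenset({"text", "quantitative"}), False),
    ModelOption("self-hosted-open", 0.003, frozenset({"text", "quantitative"}), True),
)

def pick_model(task: str, sensitive: bool, catalog: Sequence[ModelOption] = CATALOG) -> ModelOption:
    """Return the cheapest model approved for `task`; sensitive data requires self-hosting."""
    eligible = [m for m in catalog if task in m.approved_tasks and (m.self_hosted or not sensitive)]
    if not eligible:
        raise LookupError(f"no approved model for task={task!r}, sensitive={sensitive}")
    return min(eligible, key=lambda m: m.cost_per_1k_tokens)

print(pick_model("quantitative", sensitive=False).name)
print(pick_model("text", sensitive=True).name)
```

A real router would likely also weigh quality benchmarks, latency, and negotiated pricing; the point of the sketch is that the rule lives in one place, so swapping providers does not mean rebuilding each pipeline.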

Of technical note, aside from cutting integration costs, MCP accelerates prototyping and lets AI assistants tap into real-time data instead of outdated training sets. “The other thing we’re finding out from the development community is that also, while we’re kicking the tires, they’re very easily and quickly able to prototype the integration of our AI application with these other applications, without a lot of custom coding.” Protocol adherence for vendors may soon no longer be optional. “Does Provider X support MCP, and have that natively built into everything that they’re enabling on their end? I think that can preclude providers who are not going to play ball with MCP.”

The panel agrees that once MCP gains broad traction, AI agents will help companies ship new workflows faster – “the time-to-market compression, I think, will accelerate even more,” moving beyond simple productivity boosts to more seamless, judgment-level handovers between human employee and algorithm. They do remind us, however, of the importance of client control over their data, as well as cultural fit. “Ultimately, we’re driving a process change, and we’re driving a people change as well. I think that has to go very much in sync with how fast the technology is evolving. Otherwise, we’re going to have a bit of a lagging indicator for the ability to realize those benefits and the ROI.”
